7 Monte Carlo integration

A typical usage of simulation of random variables is Monte Carlo integration. With \(X_1, \ldots, X_n\) i.i.d. with density \(f\) \[\hat{\mu}_{\textrm{MC}} := \frac{1}{n} \sum_{i=1}^n h(X_i) \rightarrow \mu := E(h(X_1)) = \int h(x) f(x) \ \mathrm{d}x\] for \(n \to \infty\) by the law of large numbers (LLN).

Monte Carlo integration is a clever idea, where we use the computer to simulate i.i.d. random variables and compute an average as an approximation of an integral. The idea may be applied in a statistical context, but it way also have applications outside of statistics and be a direct competitor to numerical integration. By increasing \(n\) the LLN tells us that the average will eventually become a good approximation of the integral. However, the LLN does not quantify how large \(n\) should be, and a fundamental question of Monte Carlo integration is therefore to quantify the precision of the average.

This chapter first deals with the quantification of the precision — mostly via the asymptotic variance in the central limit theorem. This will on the one hand provide us with a quantification of precision for any specific Monte Carlo approximation, and it will on the other hand provide us with a way to compare different Monte Carlo integration techniques. The direct use of the average above requires that we can simulate from the distribution with density \(f\), but that might have low precision or it might just be plain difficult. In the second half of the chapter we will treat importance sampling, which is a technique for simulating from a different distribution and use a weighted average to obtain the approximation of the integral.

7.1 Assessment

The error analysis of Monte Carlo integration differs from that of ordinary (deterministic) numerical integration methods. For the latter, error analysis provides bounds on the error of the computable approximation in terms of properties of the function to be integrated. Such bounds provide a guarantee on what the error at most can be. It is generally impossible to provide such a guarantee when using Monte Carlo integration because the computed approximation is by construction (pseudo)random. Thus the error analysis and assessment of the precision of \(\hat{\mu}_{\textrm{MC}}\) as an approximation of \(\mu\) will be probabilistic.

There are two main approaches. We can use approximations of the distribution of \(\hat{\mu}_{\textrm{MC}}\) to assess the precision by computing a confidence interval, say. Or we can provide finite sample upper bounds, known as concentration inequalities, on the probability that the error of \(\hat{\mu}_{\textrm{MC}}\) is larger than a given \(\varepsilon\). A concentration inequality can be turned into a confidence interval, if needed, or it can be used directly to answer a question such as: if I want the approximation to have an error smaller than \(\varepsilon = 10^{-3}\), how large does \(n\) need to be to guarantee this error bound with probability at least \(99.99\)%?

Confidence intervals are typically computed using the central limit theorem and an estimated value of the asymptotic variance. The most notable practical problem is the estimation of that asymptotic variance, but otherwise the method is straightforward to use. A major deficit of this method is that the central limit theorem does not provide bounds – only approximations of unknown precision for a finite \(n\). Thus without further analysis, we cannot really be certain that the results from the central limit theorem reflect the accuracy of \(\hat{\mu}_{\textrm{MC}}\). Concentration inequalities provide actual guarantees, albeit probabilistic. They are, however, typically problem specific and harder to derive, they involve constants that are difficult to compute or estimate, and they tend to be pessimistic in real applications. The focus in this chapter is therefore on using the central limit theorem, but we do emphasize the example in Section 7.2.2 that shows how potentially misleading confidence intervals can be when the convergence is slow.

7.1.1 Using the central limit theorem

The CLT gives that \[\hat{\mu}_{\textrm{MC}} = \frac{1}{n} \sum_{i=1}^n h(X_i) \overset{\textrm{approx}} \sim \mathcal{N}(\mu, \sigma^2_{\textrm{MC}} / n)\] where \[\sigma^2_{\textrm{MC}} = V(h(X_1)) = \int (h(x) - \mu)^2 f(x) \ \mathrm{d}x.\]

We can estimate \(\sigma^2_{\textrm{MC}}\) using the empirical variance \[\hat{\sigma}^2_{\textrm{MC}} = \frac{1}{n - 1} \sum_{i=1}^n (h(X_i) - \hat{\mu}_{\textrm{MC}})^2,\]

then the variance of \(\hat{\mu}_{\textrm{MC}}\) is estimated as \(\hat{\sigma}^2_{\textrm{MC}} / n\) and a standard 95% confidence interval for \(\mu\) is \[\hat{\mu}_{\textrm{MC}} \pm 1.96 \frac{\hat{\sigma}_{\textrm{MC}}}{\sqrt{n}}.\]

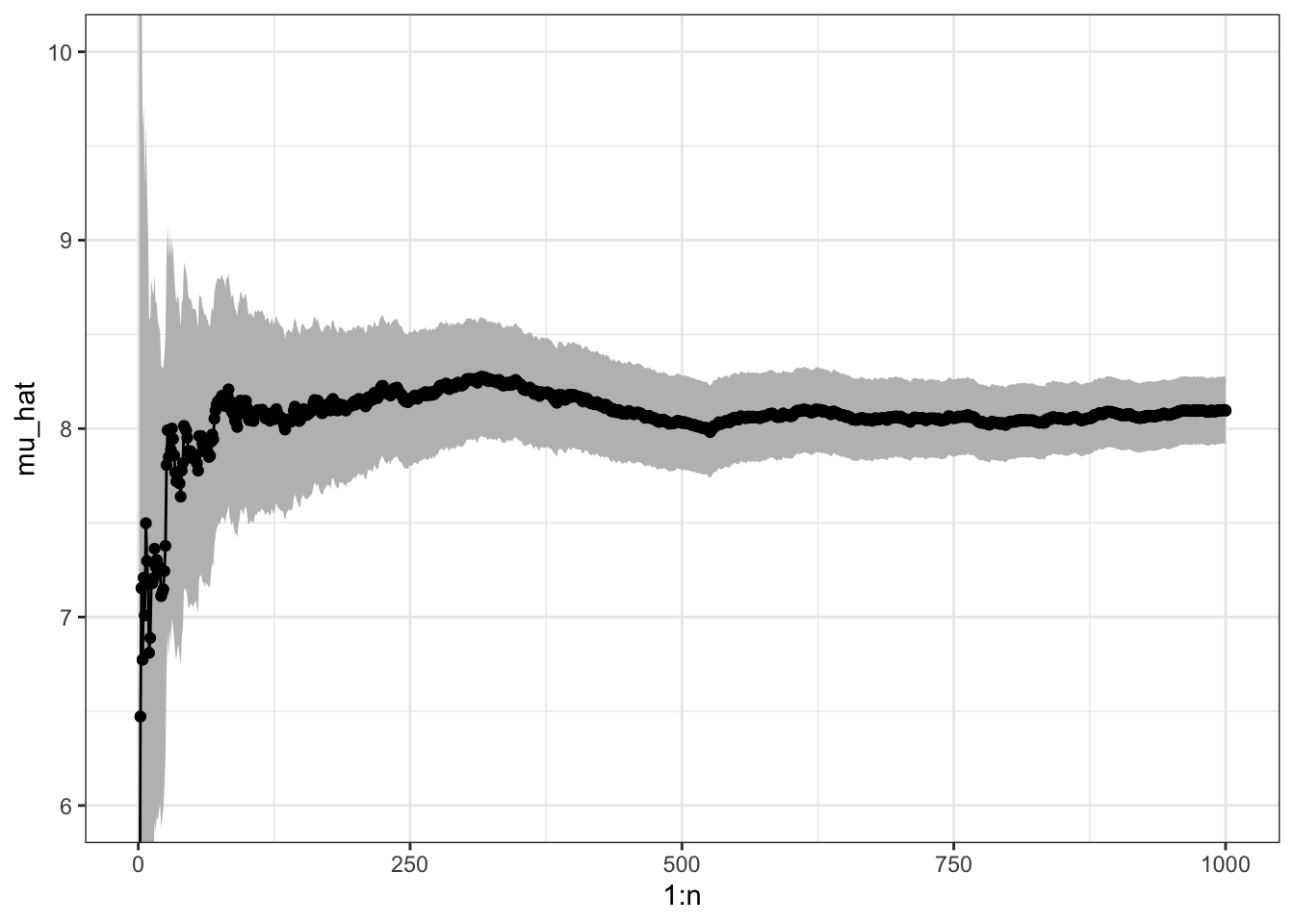

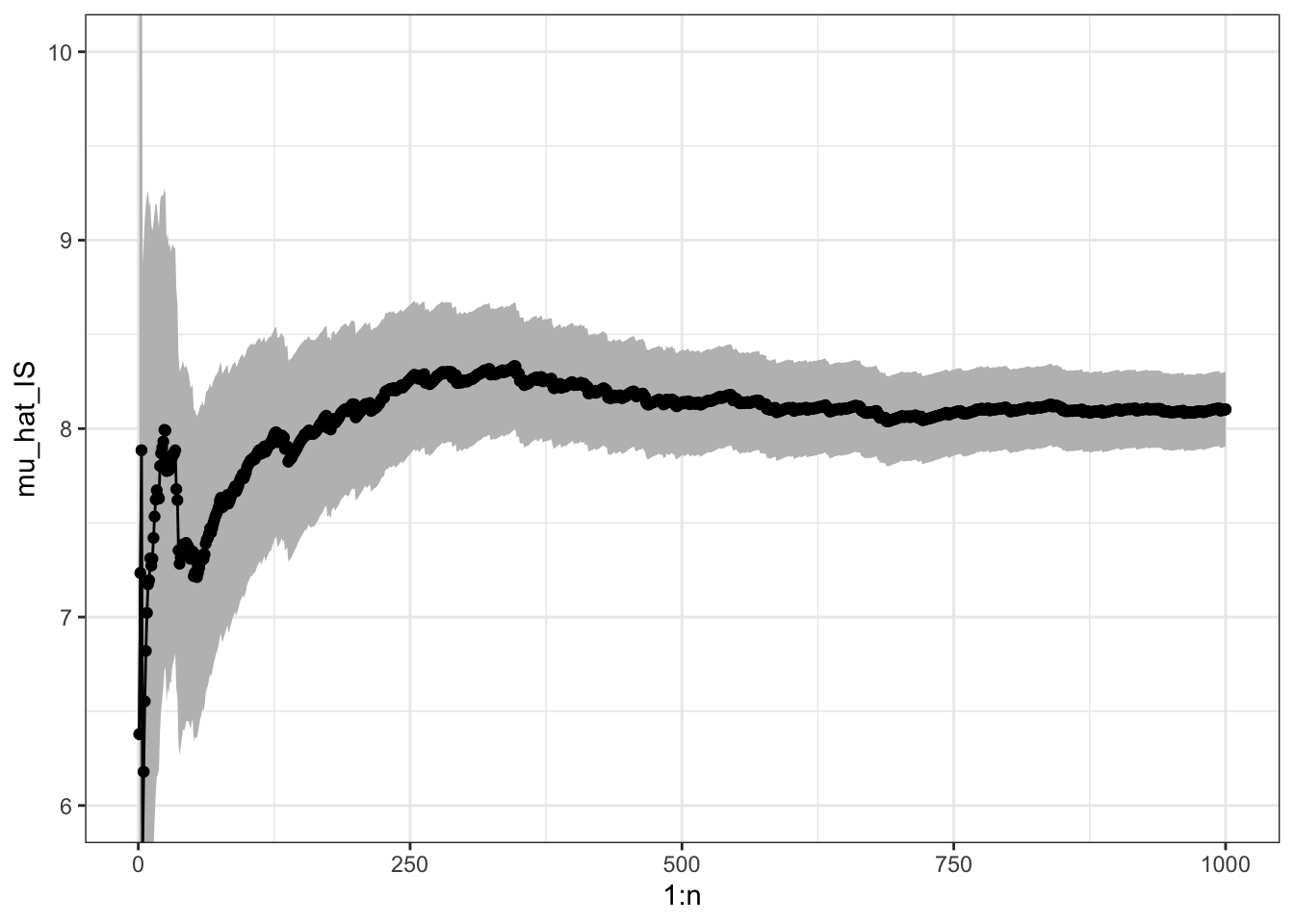

n <- 1000

x <- rgamma(n, 8) # h(x) = x

mu_hat <- (cumsum(x) / (1:n)) # Cumulative average

sigma_hat <- sd(x)

mu_hat[n] # Theoretical value 8## [1] 8.096

sigma_hat # Theoretical value sqrt(8) = 2.8284## [1] 2.862

ggplot(mapping = aes(1:n, mu_hat)) +

geom_point() +

geom_ribbon(

mapping = aes(

ymin = mu_hat - 1.96 * sigma_hat / sqrt(1:n),

ymax = mu_hat + 1.96 * sigma_hat / sqrt(1:n)

), fill = "gray") +

coord_cartesian(ylim = c(6, 10)) +

geom_line() +

geom_point()

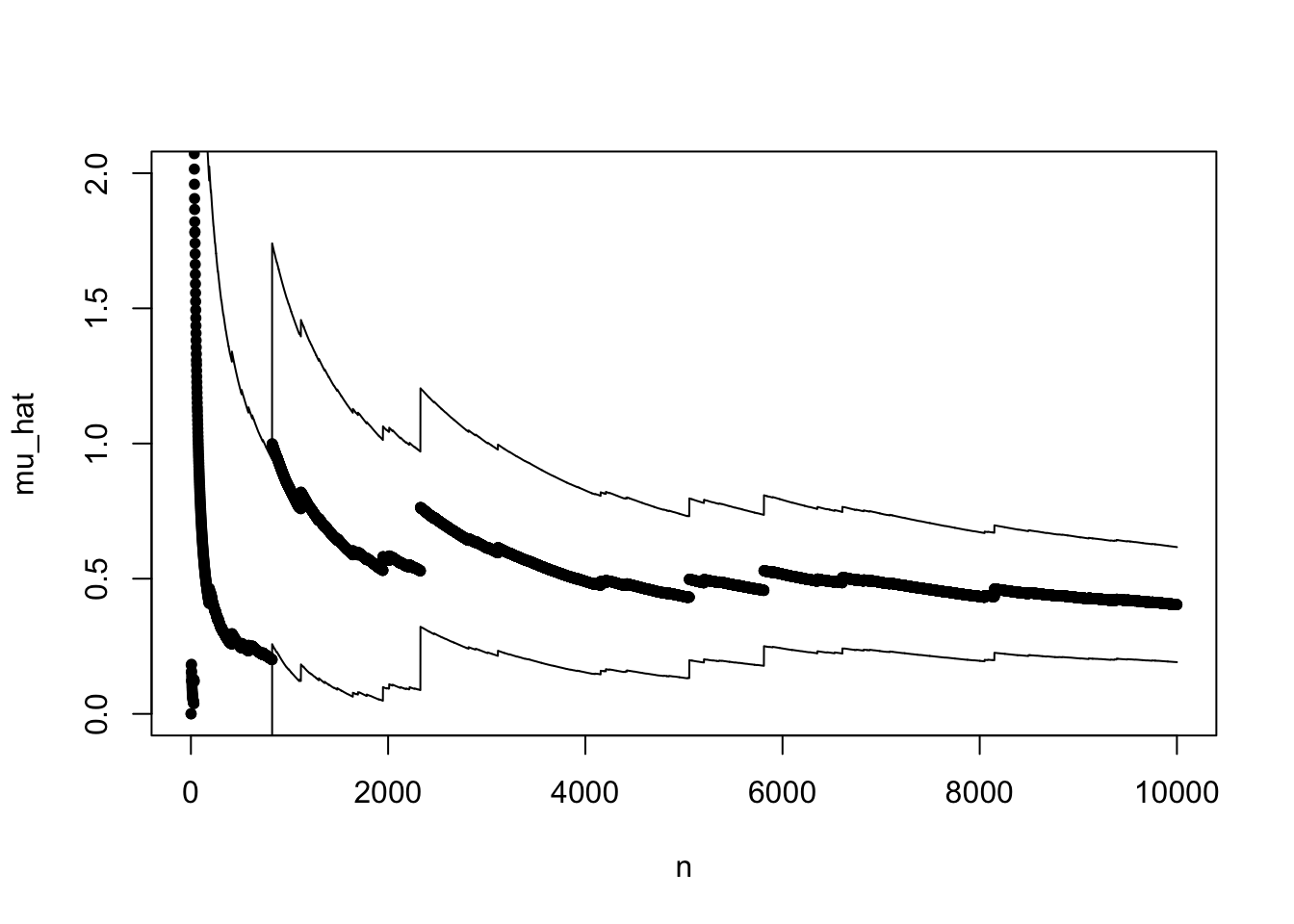

Figure 7.1: Sample path with confidence band for Monte Carlo integration of the mean of a gamma distributed random variable.

7.1.2 Concentration inequalities

If \(X\) is a real valued random variable with finite second moment, \(\mu = E(X)\) and \(\sigma^2 = V(X)\), Chebychev’s inequality holds \[P(|X - \mu| > \varepsilon) \leq \frac {\sigma^2}{\varepsilon^2}\] for all \(\varepsilon > 0\). This inequality implies, for instance, that for the simple Monte Carlo average we have the inequality \[P(|\hat{\mu}_{\textrm{MC}} - \mu| > \varepsilon) \leq \frac{\sigma^2_{\textrm{MC}}}{n\varepsilon^2}.\] A common usage of this inequality is for the qualitative statement known as the law of large numbers: for any \(\varepsilon > 0\) \[P(|\hat{\mu}_{\textrm{MC}} - \mu| > \varepsilon) \rightarrow 0\] for \(n \to \infty\). Or \(\hat{\mu}_{\textrm{MC}}\) converges in probability towards \(\mu\) as \(n\) tends to infinity. However, the inequality actually also provides a quantitative statement about how accurate \(\hat{\mu}_{\textrm{MC}}\) is as an approximation of \(\mu\).

Chebyshev’s inequality is useful due to its minimal assumption of a finite second moment. However, it typically doesn’t give a very tight bound on the probability \(P(|X - \mu| > \varepsilon)\). Much better inequalities can be obtained under stronger assumptions, in particular finite exponential moments.

Assuming that the moment generating function of \(X\) is finite, \(M(t) = E(e^{tX}) < \infty\), for some suitable \(t \in \mathbb{R}\), it follows from Markov’s inequality that \[P(X - \mu > \varepsilon) = P(e^{tX} > e^{t(\varepsilon + \mu)}) \leq e^{-t(\varepsilon + \mu)}M(t),\] which can provide a very tight upper bound by minimizing the bound over \(t\). This requires some knowledge of the moment generating function. We illustrate the usage of this inequality below by considering the gamma distribution where the moment generating function is well known.

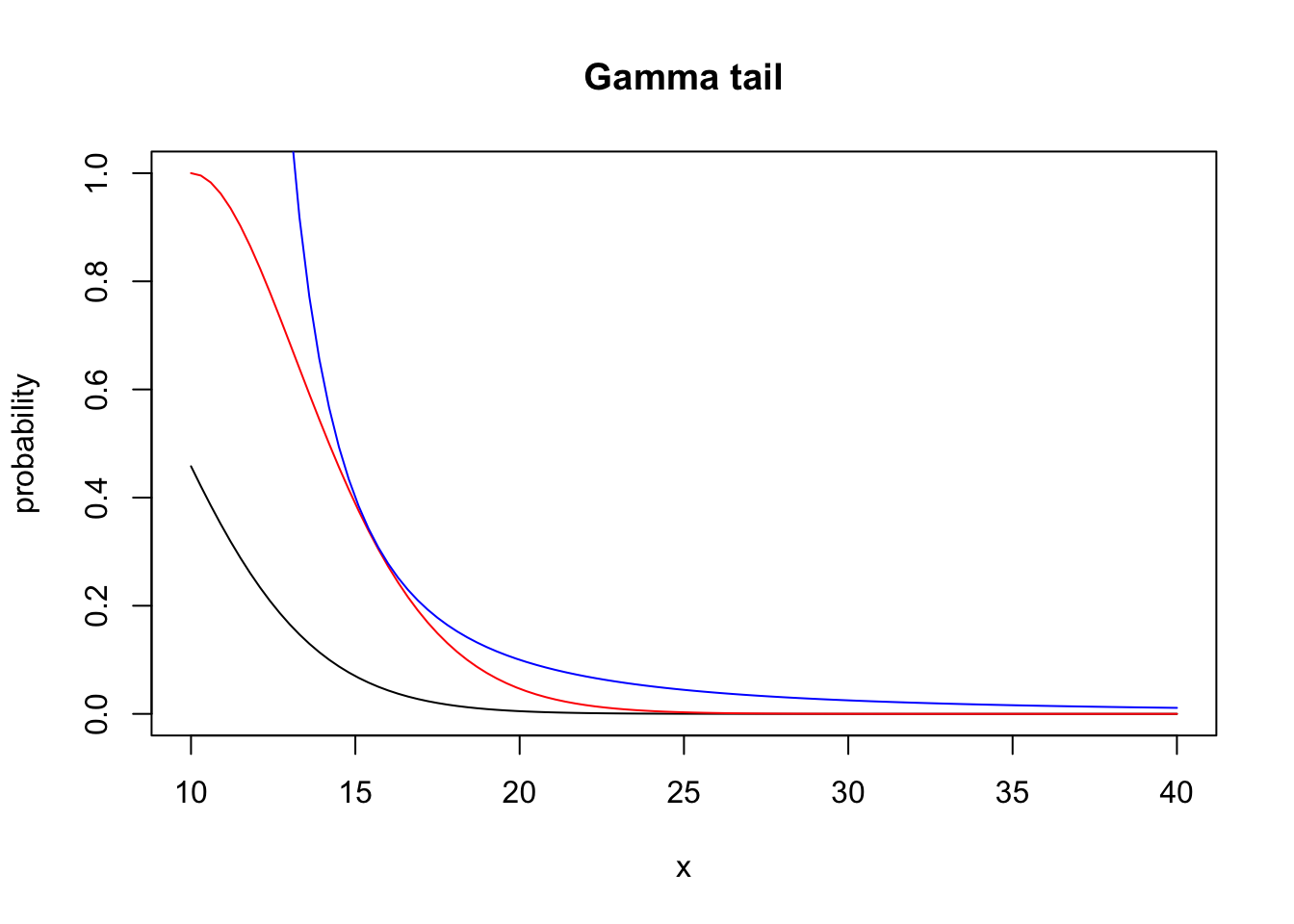

7.1.3 Exponential tail bound for Gamma distributed variables

If \(X\) follows a Gamma distribution with shape parameter \(\lambda > 0\) and \(t <1\), then \[M(t) = \frac{1}{\Gamma(\lambda)} \int_0^{\infty} x^{\lambda - 1} e^{-(1-t) x} \, \mathrm{d} x = \frac{1}{(1-t)^{\lambda}}.\] Whence \[P(X-\lambda > \varepsilon) \leq e^{-t(\varepsilon + \lambda)} \frac{1}{(1-t)^{\lambda}}.\] Minimization over \(t\) of the right hand side gives the minimizer \(t = \varepsilon/(\varepsilon + \lambda)\) and the upper bound \[P(X-\lambda > \varepsilon) \leq e^{-\varepsilon} \left(\frac{\varepsilon + \lambda}{\lambda }\right)^{\lambda}.\] Compare this to the bound \[P(|X-\lambda| > \varepsilon) \leq \frac{\lambda}{\varepsilon^2}\] from Chebychev’s inequality.

Figure 7.2: Actual tail probabilities (left) for the gamma distribution, computed via the pgamma function, compared it to the tight bound (red) and the weaker bound from Chebychev’s inequality (blue). The differences in the tail are more clearly seen for the log-probabilities (right)

7.2 Importance sampling

When we are only interested in Monte Carlo integration, we do not need to sample from the target distribution.

Observe that \[\begin{align} \mu = \int h(x) f(x) \ \mathrm{d}x & = \int h(x) \frac{f(x)}{g(x)} g(x) \ \mathrm{d}x \\ & = \int h(x) w^*(x) g(x) \ \mathrm{d}x \end{align}\]

whenever \(g\) is a density fulfilling that \[g(x) = 0 \Rightarrow f(x) = 0.\]

With \(X_1, \ldots, X_n\) i.i.d. with density \(g\) define the weights \[w^*(X_i) = f(X_i) / g(X_i).\] The importance sampling estimator is \[\hat{\mu}_{\textrm{IS}}^* := \frac{1}{n} \sum_{i=1}^n h(X_i)w^*(X_i).\] It has mean \(\mu\). Again by the LLN \[\hat{\mu}_{\textrm{IS}}^* \rightarrow E(h(X_1) w^*(X_1)) = \mu.\]

We will illustrate the use of importance sampling by computing the mean in the gamma distribution via simulations from a Gaussian distribution, cf. also Section 7.1.1.

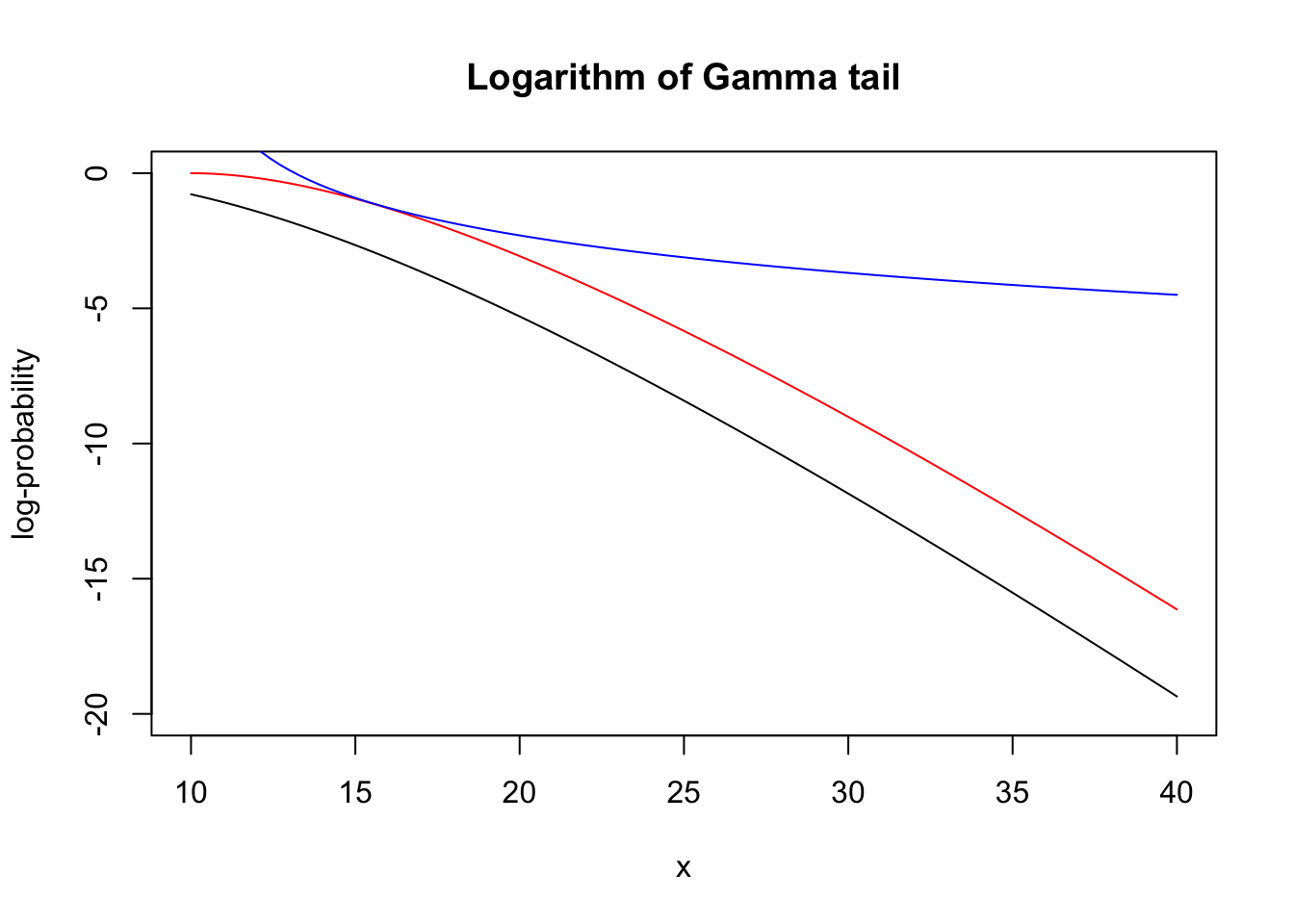

x <- rnorm(n, 10, 3)

w_star <- dgamma(x, 8) / dnorm(x, 10, 3)

mu_hat_IS <- (cumsum(x * w_star) / (1:n))

mu_hat_IS[n] # Theoretical value 8## [1] 7.995To assess the precision of the importance sampling estimate via the CLT we need the variance of the average just as for plain Monte Carlo integration. By the CLT \[\hat{\mu}_{\textrm{IS}}^* \overset{\textrm{approx}} \sim \mathcal{N}(\mu, \sigma^{*2}_{\textrm{IS}} / n)\] where \[\sigma^{*2}_{\textrm{IS}} = V (h(X_1)w^*(X_1)) = \int (h(x) w^*(x) - \mu)^2 g(x) \ \mathrm{d}x.\]

The importance sampling variance can be estimated similarly as the Monte Carlo variance \[\hat{\sigma}^{*2}_{\textrm{IS}} = \frac{1}{n - 1} \sum_{i=1}^n (h(X_i)w^*(X_i) - \hat{\mu}_{\textrm{IS}}^*)^2,\] and a 95% standard confidence interval is computed as \[\hat{\mu}^*_{\textrm{IS}} \pm 1.96 \frac{\hat{\sigma}^*_{\textrm{IS}}}{\sqrt{n}}.\]

sigma_hat_IS <- sd(x * w_star)

sigma_hat_IS # Theoretical value ??## [1] 3.5

Figure 7.3: Sample path with confidence band for importance sampling Monte Carlo integration of the mean of a gamma distributed random variable via simulations from a Gaussian distribution.

It may happen that \(\sigma^{*2}_{\textrm{IS}} > \sigma^2_{\textrm{MC}}\) or \(\sigma^{*2}_{\textrm{IS}} < \sigma^2_{\textrm{MC}}\) depending on \(h\) and \(g\), but by choosing \(g\) cleverly so that \(h(x) w^*(x)\) becomes as constant as possible, importance sampling can often reduce the variance compared to plain Monte Carlo integration.

For the mean of the gamma distribution above, \(\sigma^{*2}_{\textrm{IS}}\) is about 50% larger than \(\sigma^2_{\textrm{MC}}\), so we loose precision by using importance sampling this way when compared to plain Monte Carlo integration. In Section 7.3 we consider a different example where we achieve a considerable variance reduction by using importance sampling.

7.2.1 Unknown normalization constants

If \(f = c^{-1} q\) with \(c\) unknown then \[c = \int q(x) \ \mathrm{d}x = \int \frac{q(x)}{g(x)} g(x) \ d x,\] and \[\mu = \frac{\int h(x) w^*(x) g(x) \ d x}{\int w^*(x) g(x) \ d x},\] where \(w^*(x) = q(x) / g(x).\)

If \(X_1, \ldots, X_n\) are then i.i.d. from the distribution with density \(g\), an importance sampling estimate of \(\mu\) can be computed as \[\hat{\mu}_{\textrm{IS}} = \frac{\sum_{i=1}^n h(X_i) w^*(X_i)}{\sum_{i=1}^n w^*(X_i)} = \sum_{i=1}^n h(X_i) w(X_i),\] where \(w^*(X_i) = q(X_i) / g(X_i)\) and \[w(X_i) = \frac{w^*(X_i)}{\sum_{i=1}^n w^*(X_i)}\] are the standardized weights. This works irrespectively of the value of the normalizing constant \(c\), and it actually works if also \(g\) is unnormalized.

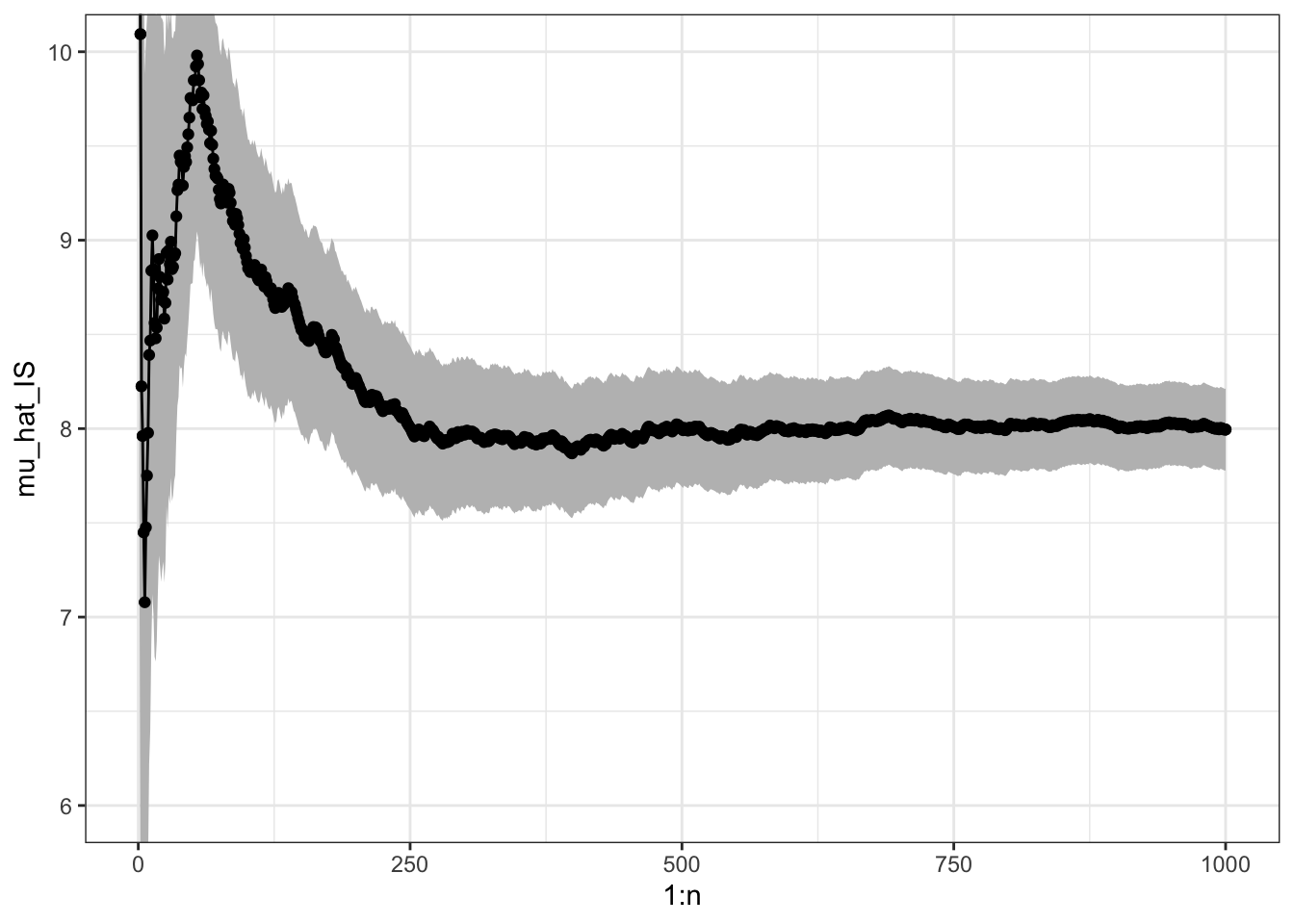

Revisiting the mean of the gamma distribution, we can implement importance sampling via samples from a Gaussian distribution but using weights computed without the normalization constants.

w_star <- numeric(n)

x_pos <- x[x > 0]

w_star[x > 0] <- exp((x_pos - 10)^2 / 18 - x_pos + 7 * log(x_pos))

mu_hat_IS <- cumsum(x * w_star) / cumsum(w_star)

mu_hat_IS[n] # Theoretical value 8## [1] 8.102The variance of the IS estimator with standardized weights is a little more complicated, because the estimator is a ratio of random variables. From the multivariate CLT \[\frac{1}{n} \sum_{i=1}^n \left(\begin{array}{c} h(X_i) w^*(X_i) \\ w^*(X_i) \end{array}\right) \overset{\textrm{approx}}{\sim} \mathcal{N}\left( c \left(\begin{array}{c} \mu \\ {1} \end{array}\right), \frac{1}{n} \left(\begin{array}{cc} \sigma^{*2}_{\textrm{IS}} & \gamma \\ \gamma & \sigma^2_{w^*} \end{array} \right)\right),\] where \[\begin{align} \sigma^{*2}_{\textrm{IS}} & = V(h(X_1)w^*(X_1)) \\ \gamma & = \mathrm{cov}(h(X_1)w^*(X_1), w^*(X_1)) \\ \sigma_{w^*}^2 & = V (w^*(X_1)). \end{align}\]

We can then apply the \(\Delta\)-method with \(t(x, y) = x / y\). Note that \(Dt(x, y) = (1 / y, - x / y^2)\), whence \[Dt(c\mu, c) \left(\begin{array}{cc} \hat{\sigma}^{*2}_{\textrm{IS}} & \gamma \\ \gamma & \sigma^2_{w^*} \end{array} \right) Dt(c\mu, c)^T = c^{-2} (\sigma^{*2}_{\textrm{IS}} + \mu^2 \sigma_{w^*}^2 - 2 \mu \gamma).\]

By the \(\Delta\)-method \[\hat{\mu}_{\textrm{IS}} \overset{\textrm{approx}}{\sim} \mathcal{N}(\mu, c^{-2} (\sigma^{*2}_{\textrm{IS}} + \mu^2 \sigma_{w^*}^2 - 2 \mu \gamma) / n).\] The unknown quantities in the asymptotic variance must be estimated using e.g. their empirical equivalents, and if \(c \neq 1\) (we have used unnormalized densities) it is necessary to estimate \(c\) as \(\hat{c} = \frac{1}{n} \sum_{i=1}^n w^*(X_i)\) to compute an estimate of the variance.

For the example with the mean of the gamma distribution, we find the following estimate of the variance.

c_hat <- mean(w_star)

sigma_hat_IS <- sd(x * w_star)

sigma_hat_w_star <- sd(w_star)

gamma_hat <- cov(x * w_star, w_star)

sigma_hat_IS_w <- sqrt(sigma_hat_IS^2 + mu_hat_IS[n]^2 * sigma_hat_w_star^2 -

2 * mu_hat_IS[n] * gamma_hat) / c_hat

sigma_hat_IS_w## [1] 3.198

Figure 7.4: Sample path with confidence band for importance sampling Monte Carlo integration of the mean of a gamma distributed random variable via simulations from a Gaussian distribution and using standardized weights.

In this example, the variance when using standardized weights is a little lower than using unstandardized weights, but still larger than the variance for the plain Monte Carlo average.

7.2.2 Computing a high-dimensional integral

To further illustrate the usage but also the limitations of Monte Carlo integration, consider the following \(p\)-dimensional integral \[ \int e^{-\frac{1}{2}\left(x_1^2 + \sum_{i=2}^p (x_i - \alpha x_{i-1})^2\right)} \mathrm{d} x.\] Now this integral is not even expressed as an expectation w.r.t. any distribution in the first place – it is an integral w.r.t. Lebesgue measure in \(\mathbb{R}^p\). We use the same idea as in importance sampling to rewrite the integral as an expectation w.r.t. a probability distribution. There might be many ways to do this, and the following is just one.

Rewrite the exponent as \[||x||_2^2 + \sum_{i = 2}^p \alpha^2 x_{i-1}^2 - 2\alpha x_i x_{i-1}\] so that

\[\begin{align*} \int e^{-\frac{1}{2}\left(x_1^2 + \sum_{i=2}^p (x_i - \alpha x_{i-1})^2\right)} \mathrm{d} x & = \int e^{- \frac{1}{2} \sum_{i = 2}^p \alpha^2 x_{i-1}^2 - 2\alpha x_i x_{i-1}} e^{-\frac{||x||_2^2}{2}} \mathrm{d} x \\ & = (2 \pi)^{p/2} \int e^{- \frac{1}{2} \sum_{i = 2}^p \alpha^2 x_{i-1}^2 - 2\alpha x_i x_{i-1}} f(x) \mathrm{d} x \end{align*}\]

where \(f\) is the density for the \(\mathcal{N}(0, I_p)\) distribution. Thus if \(X \sim \mathcal{N}(0, I_p)\), \[ \int e^{-\frac{1}{2}\left(x_1^2 + \sum_{i=2}^p (x_i - \alpha x_{i-1})^2\right)} \mathrm{d} x = (2 \pi)^{p/2} E\left( e^{- \frac{1}{2} \sum_{i = 2}^p \alpha^2 X_{i-1}^2 - 2\alpha X_i X_{i-1}} \right).\]

The Monte Carlo integration below computes \[\mu = E\left( e^{- \frac{1}{2} \sum_{i = 2}^p \alpha^2 X_{i-1}^2 - 2\alpha X_i X_{i-1}} \right)\] by generating \(p\)-dimensional random variables from \(\mathcal{N}(0, I_p)\). It can actually be shown that \(\mu = 1\), but we skip the proof of that.

First, we implement the function we want to integrate.

h <- function(x, alpha = 0.1){

p <- length(x)

tmp <- alpha * x[1:(p - 1)]

exp( - sum((tmp / 2 - x[2:p]) * tmp))

}Then we specify various parameters.

n <- 10000 # The number of random variables to generate

p <- 100 # The dimension of each random variableThe actual computation is implemented using the apply function. We

first look at the case with \(\alpha = 0.1\).

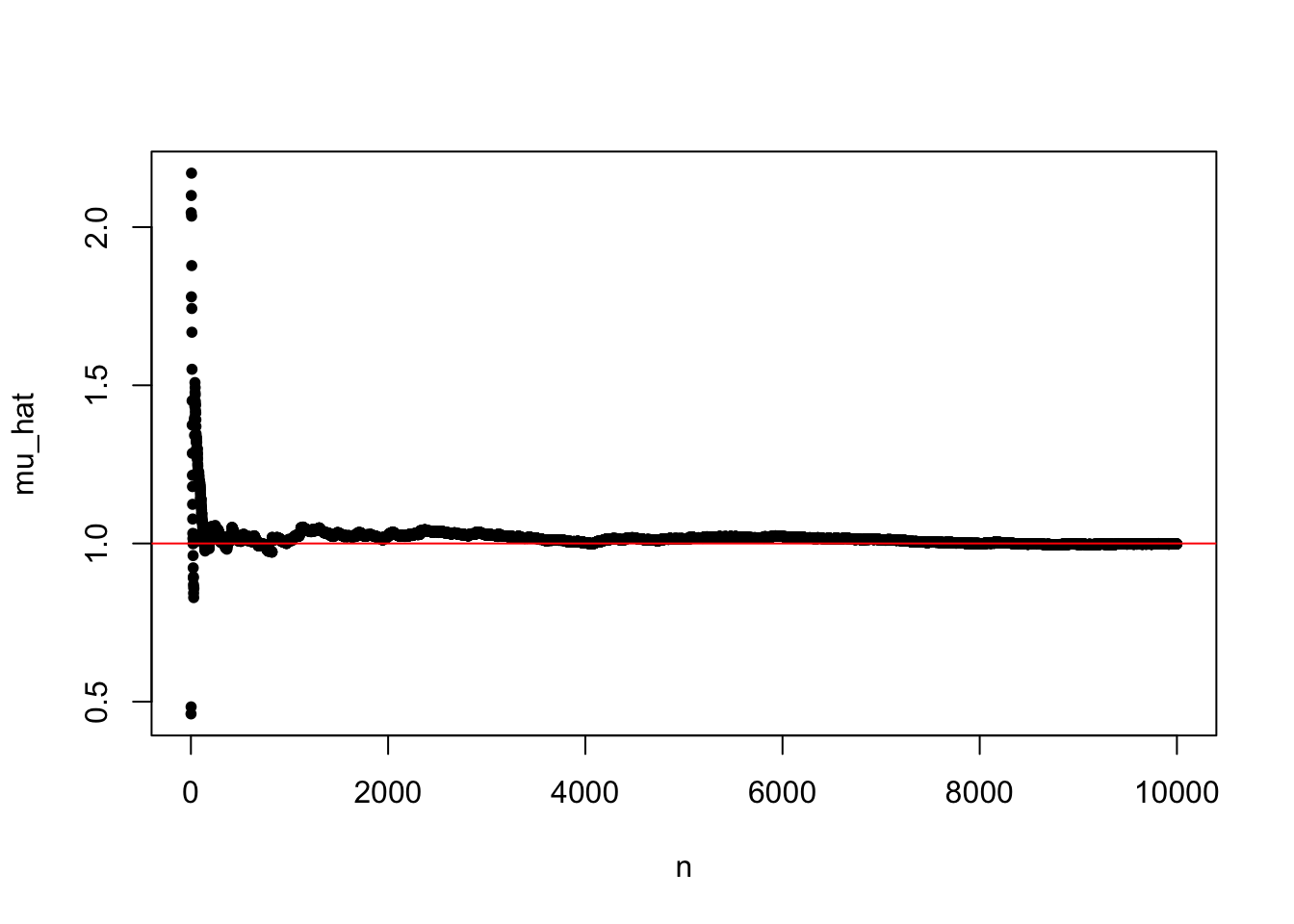

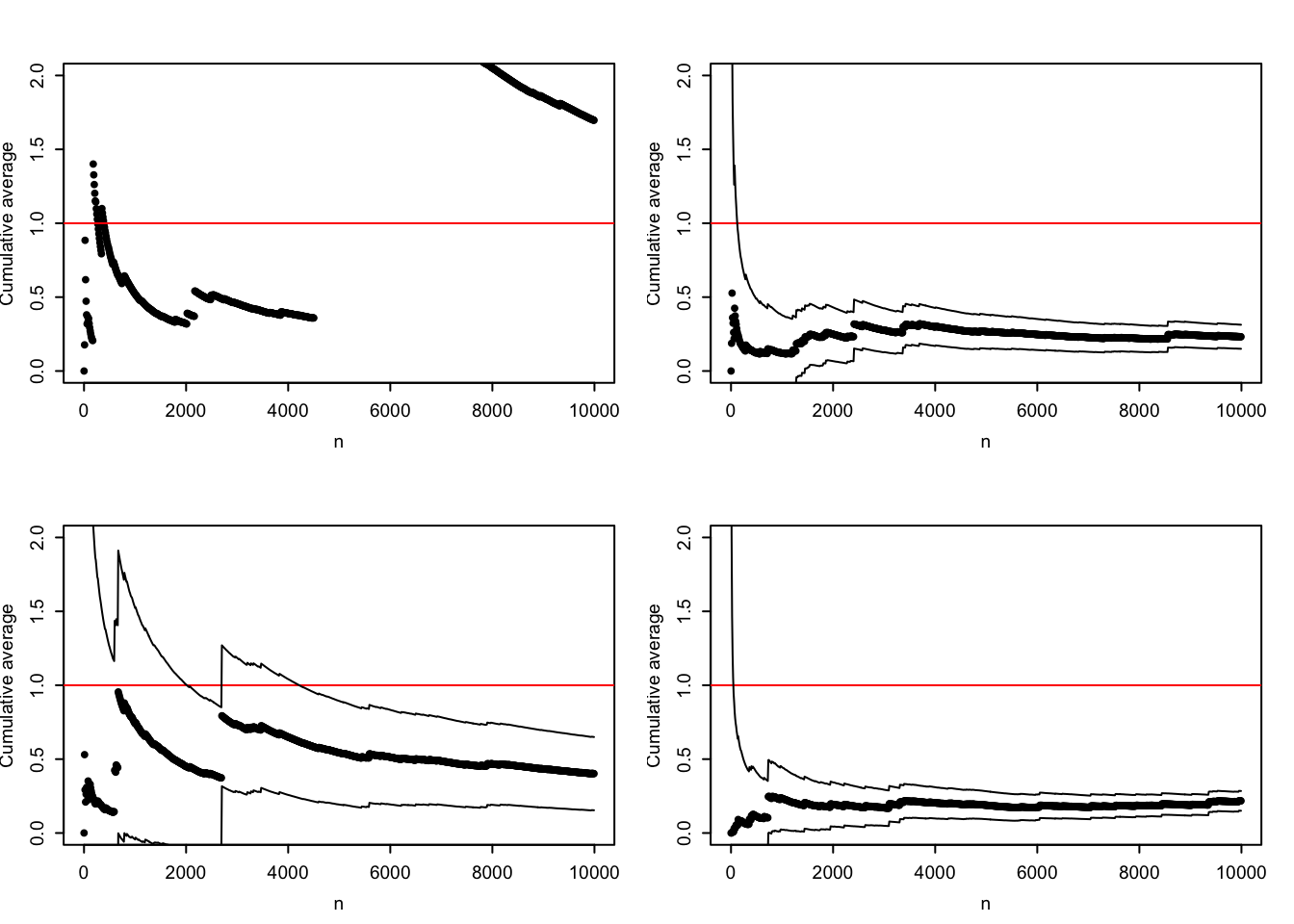

We can then plot the cumulative average and compare it to the actual value of the integral that we know is 1.

If we want to control the error with probability 0.95 we can use Chebychev’s inequality and solve for \(\varepsilon\) using the estimated variance.

plot(mu_hat, pch = 20, xlab = "n")

abline(h = 1, col = "red")

sigma_hat <- sd(evaluations)

epsilon <- sigma_hat / sqrt((1:n) * 0.05)

lines(1:n, mu_hat + epsilon)

lines(1:n, mu_hat - epsilon)

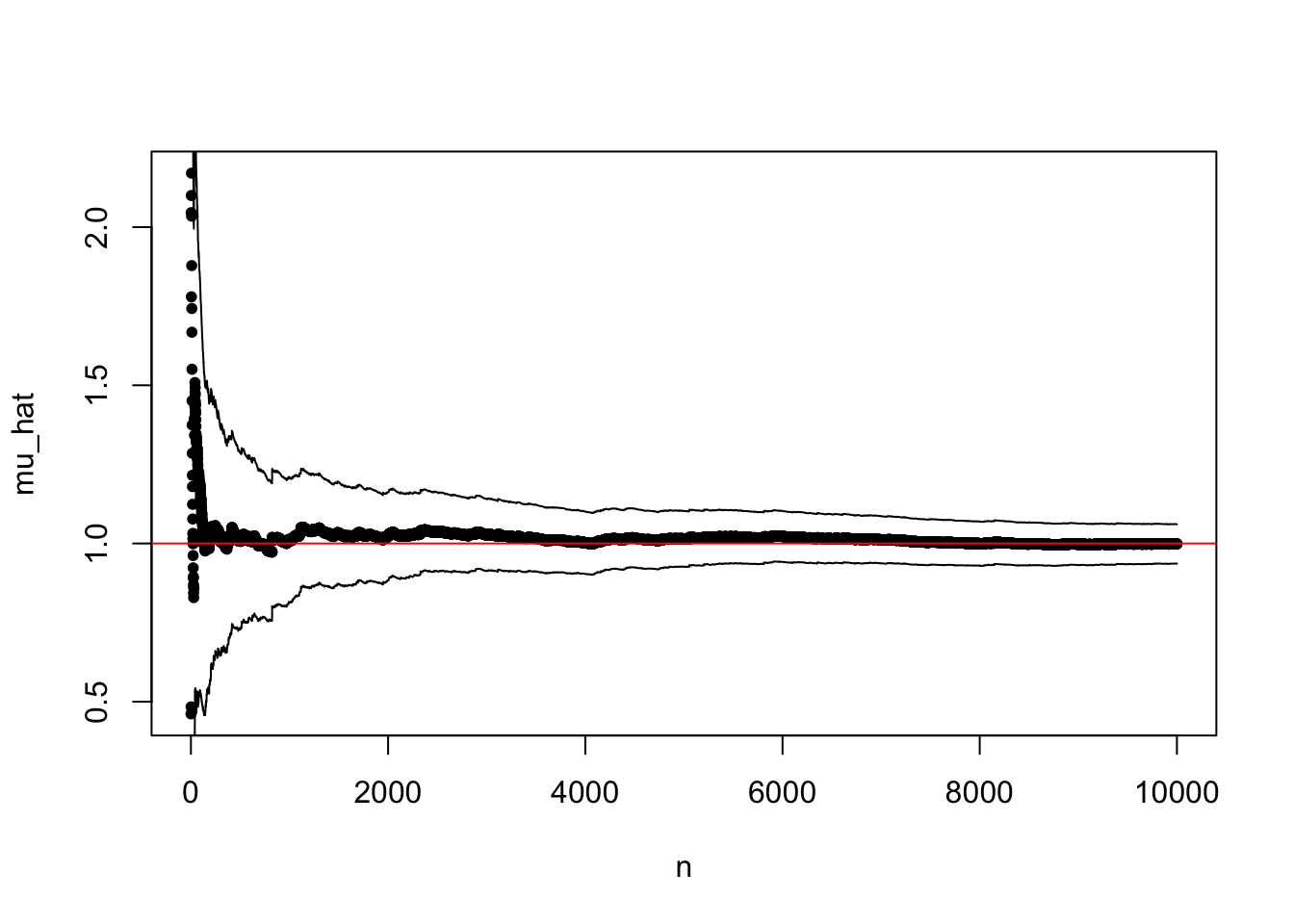

The confidence bands provided by the central limit theorem are typically more accurate estimates of the actual uncertainty than the upper bounds provided by Chebychev’s inequality.

plot(mu_hat, pch = 20, xlab = "n")

abline(h = 1, col = "red")

lines(1:n, mu_hat + 2 * sigma_hat / sqrt(1:n))

lines(1:n, mu_hat - 2 * sigma_hat / sqrt(1:n))

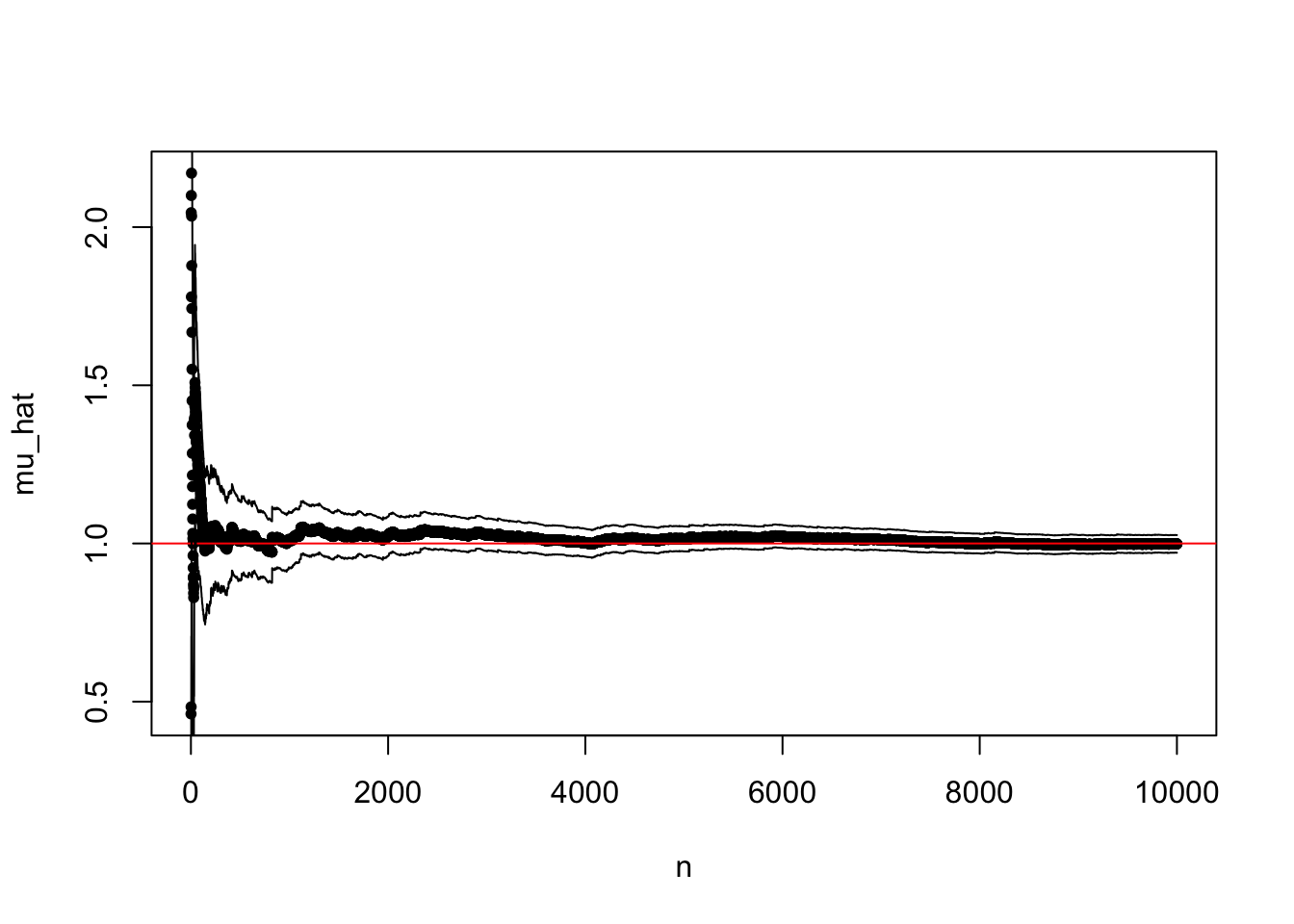

To illustrate the limitations of Monte Carlo integration we increase \(\alpha\) to \(\alpha = 0.4\).

evaluations <- apply(x, 1, h, alpha = 0.4)

The sample path above is not carefully selected to be pathological. Due to occasional large values, the typical sample path will show occasional large jumps, and the variance may easily be grossly underestimated.

Figure 7.5: Four sample paths of the cumulative average for \(\alpha = 0.4\).

To be fair, it is the choice of a standard multivariate normal distribution as the reference distribution for large \(\alpha\) that is problematic rather than Monte Carlo integration and importance sampling as such. However, in high dimensions it can be difficult to choose a suitable distribution to sample from.

The difficulty for the specific integral is due to the exponent of the integrand, which can become large and positive if \(x_i \approx x_{i-1}\) for enough coordinates. This happens rarely for independent random variables, but large values of rare events can, nevertheless, contribute notably to the integral. The larger \(\alpha \in (0, 1)\) is, the more pronounced is the problem with occasional large values of the integrand. It is possible to use importance sampling and instead sample from a distribution where the large values are more likely. For this particular example we would need to simulate from a distribution where the variables are dependent, and we will not pursue that.

7.3 Network failure

In this section we consider a more serious application of importance sampling. Though still a toy example, where we can find an exact solution, the example illustrates well the type of application where we want to approximate a small probability using a Monte Carlo average. Importance sampling can then increase the probability of the rare event and as a result make the variance of the Monte Carlo average smaller.

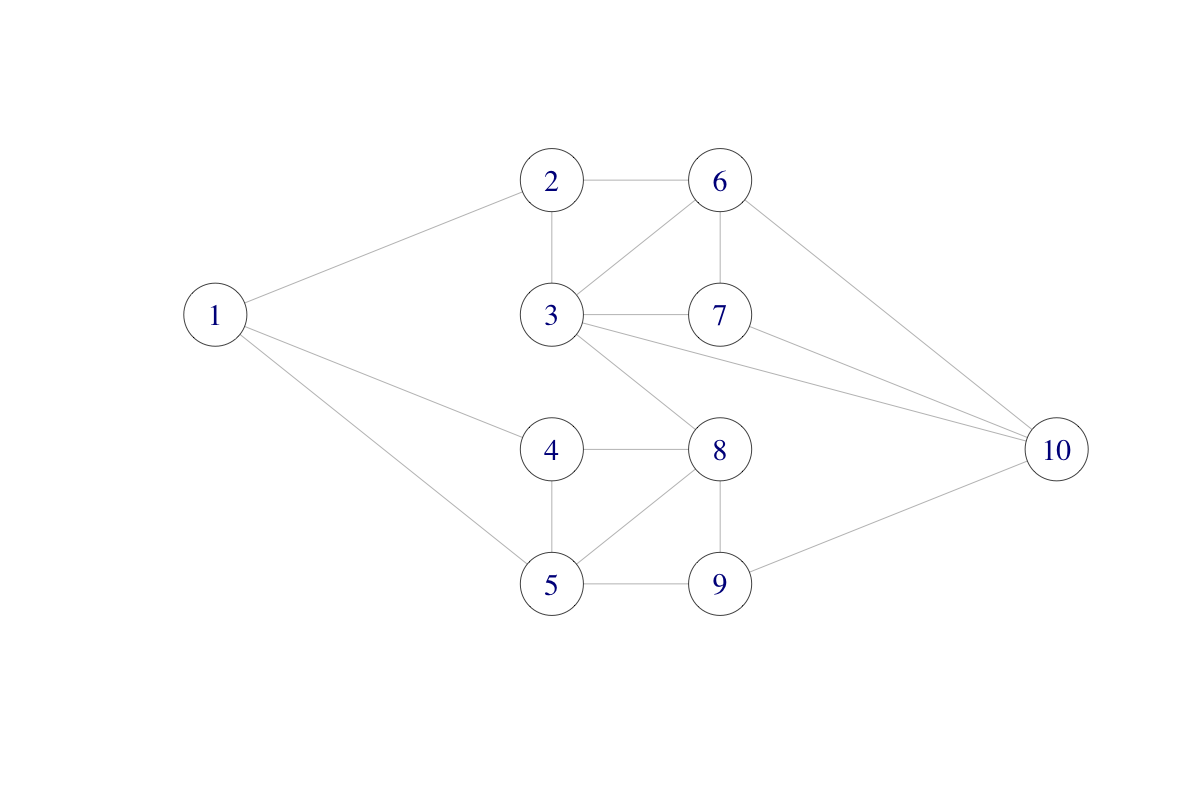

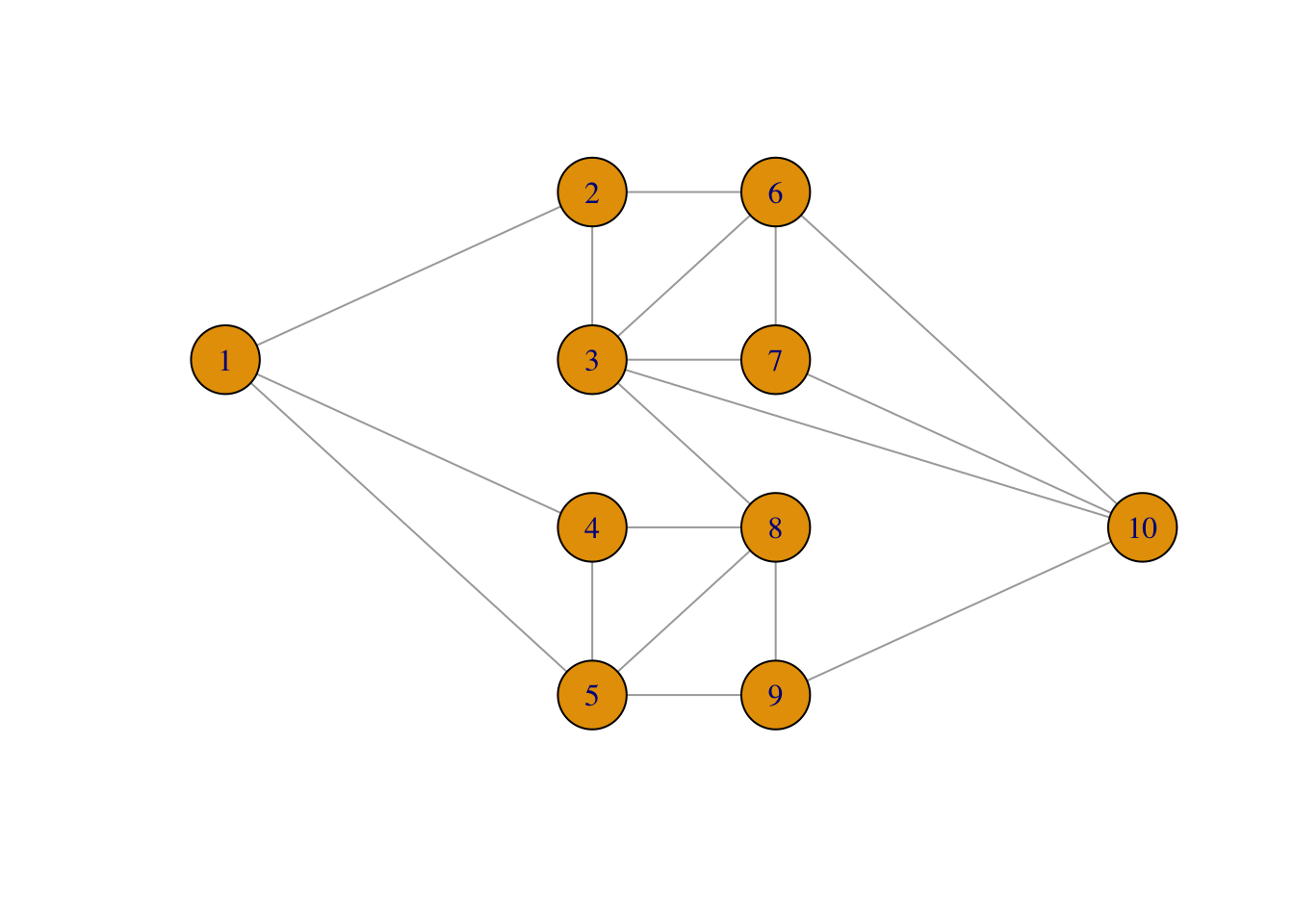

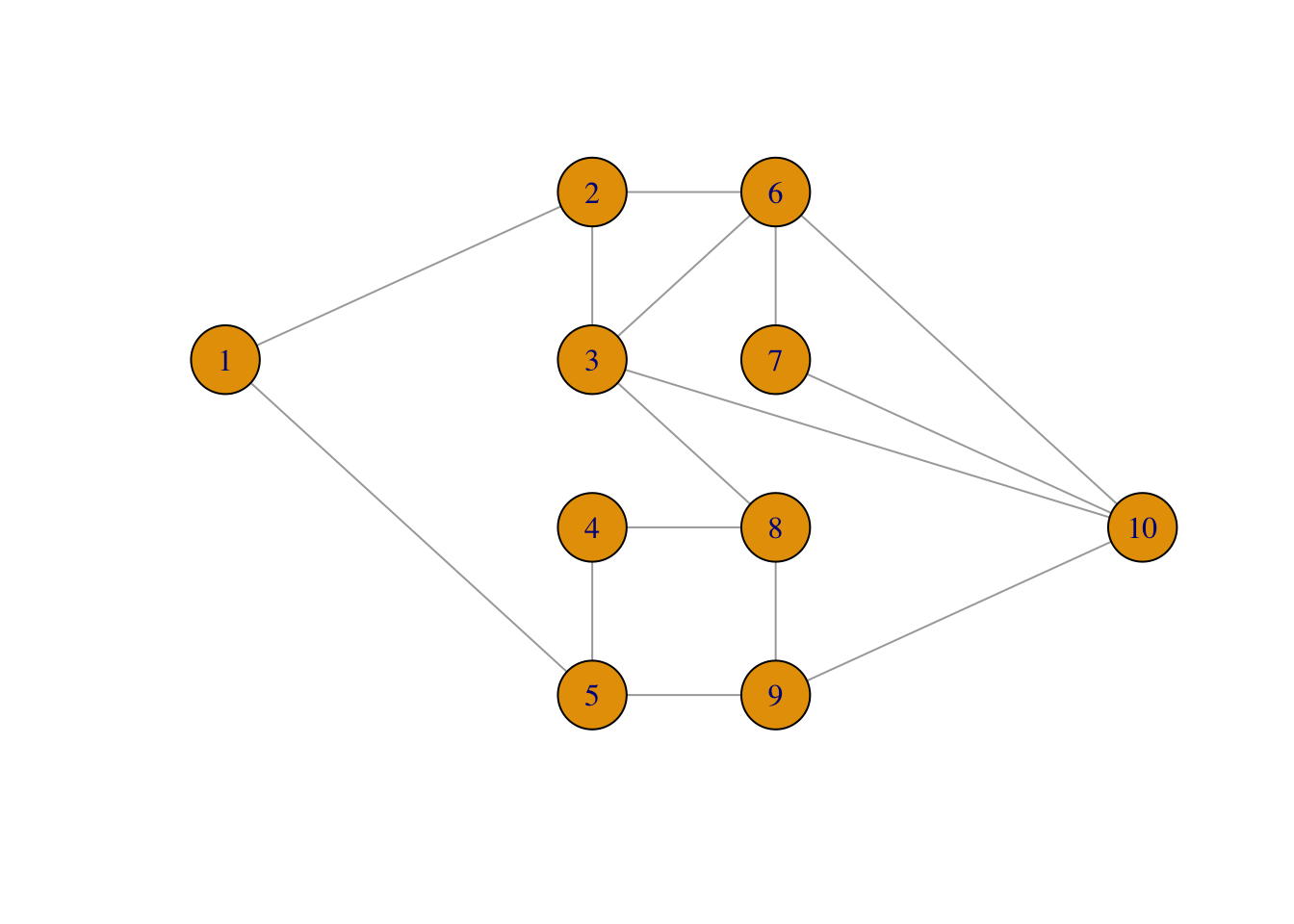

We will consider the following network consisting of ten nodes and with some of the nodes connected.

The network could be a computer network with ten computers. The different connections (edges) may “fail” independently with probability \(p\), and we ask the question: what is the probability that node 1 and node 10 are disconnected?

We can answer this question by computing an integral of an indicator function, that is, by computing the sum \[\mu = \sum_{x \in \{0,1\}^{18}} 1_B(x) f_p(x)\] where \(f_p(x)\) is the point probability of \(x\), with \(x\) representing which of the 18 edges in the graph that fail, and \(B\) represents the set of edges where node 1 and node 10 are disconnected. By simulating edge failures we can approximate the sum as a Monte Carlo average.

The network of nodes can be represented as a graph adjacency matrix \(A\) such that \(A_{ij} = 1\) if and only if there is an edge between \(i\) and \(j\) (and \(A_{ij} = 0\) otherwise).

A # Graph adjacency matrix## [,1] [,2] [,3] [,4] [,5] [,6] [,7] [,8] [,9] [,10]

## [1,] 0 1 0 1 1 0 0 0 0 0

## [2,] 1 0 1 0 0 1 0 0 0 0

## [3,] 0 1 0 0 0 1 1 1 0 1

## [4,] 1 0 0 0 1 0 0 1 0 0

## [5,] 1 0 0 1 0 0 0 1 1 0

## [6,] 0 1 1 0 0 0 1 0 0 1

## [7,] 0 0 1 0 0 1 0 0 0 1

## [8,] 0 0 1 1 1 0 0 0 1 0

## [9,] 0 0 0 0 1 0 0 1 0 1

## [10,] 0 0 1 0 0 1 1 0 1 0To compute the probability that 1 and 10 are disconnected by Monte Carlo integration, we need to sample (sub)graphs by randomly removing some of the edges. This is implemented using the upper triangular part of the (symmetric) adjacency matrix.

sim_net <- function(Aup, p) {

ones <- which(Aup == 1)

Aup[ones] <- sample(

c(0, 1),

length(ones),

replace = TRUE,

prob = c(p, 1 - p)

)

Aup

}The core of the implementation above uses the sample() function, which

can sample with replacement from the set \(\{0, 1\}\). The vector ones

contains indices of the (upper triangular part of the) adjacency matrix

containing a 1, and these positions are replaced by the sampled values before

the matrix is returned.

It is fairly fast to sample even a large number of random graphs this way.

Aup <- A

Aup[lower.tri(Aup)] <- 0

bench::bench_time(replicate(1e5, {sim_net(Aup, 0.5); NULL}))## process real

## 690ms 682msThe second function we implement checks network connectivity based on the upper triangular part of the adjacency matrix. It relies on the fact that there is a path from node 1 to node 10 consisting of \(k\) edges if and only if \((A^k)_{1,10} > 0\). We see directly that such a path needs to consist of at least \(k = 3\) edges. Also, we don’t need to check paths with more than \(k = 9\) edges as they will contain the one node multiple times and can thus be shortened.

discon <- function(Aup) {

A <- Aup + t(Aup)

i <- 3

Apow <- A %*% A %*% A # A%^%3

while(Apow[1, 10] == 0 & i < 9) {

Apow <- Apow %*% A

i <- i + 1

}

Apow[1, 10] == 0 # TRUE if nodes 1 and 10 not connected

}We then obtain the following estimate of the probability of nodes 1 and 10 being disconnected using Monte Carlo integration.

seed <- 27092016

set.seed(seed)

n <- 1e5

tmp <- replicate(n, discon(sim_net(Aup, 0.05)))

mu_hat <- mean(tmp)As this is a random approximation, we should report not only the Monte Carlo estimate but also the confidence interval. Since the estimate is an average of 0-1-variables, we can estimate the variance, \(\sigma^2\), of the individual terms using that \(\sigma^2 = \mu (1 - \mu)\). We could just as well have used the empirical variance, which would give almost the same numerical value as \(\hat{\mu} (1 - \hat{\mu})\), but we use the latter estimator to illustrate that any (good) estimator of \(\sigma^2\) can be used when estimating the asymptotic variance.

## [1] 0.000226 0.000340 0.000454The estimated probability is low and only in about 1 of 3000 simulated graphs will node 1 and 10 be disconnected. This suggests that importance sampling can be useful if we sample from a probability distribution with a larger probability of edge failure.

To implement importance sampling we note that the point probabilities (the density w.r.t. counting measure) for sampling the 18 independent 0-1-variables \(x = (x_1, \ldots, x_{18})\) with \(P(X_i = 0) = p\) is

\[f_p(x) = p^{18 - s} (1- p)^{s}\]

where \(s = \sum_{i=1}^{18} x_i\). In the implementation, weights are computed that correspond to using probability \(p_0\) (with density \(g = f_{p_0}\)) instead of \(p\), and the weights are only computed if node 1 and 10 are disconnected.

weights <- function(Aup, Aup0, p0, p) {

w <- discon(Aup0)

if (w) {

s <- sum(Aup0)

w <- (p / p0)^18 * (p0 * (1 - p) / (p * (1 - p0)))^s

}

as.numeric(w)

}The implementation uses the formula

\[ w(x) = \frac{f_p(x)}{f_{p_0}(x)} = \frac{p^{18 - s} (1- p)^{s}}{p_0^{18 - s} (1- p_0)^{s}} = \left(\frac{p}{p_0}\right)^{18} \left(\frac{p_0 (1- p)}{p (1- p_0)}\right)^s. \]

The importance sampling estimate of \(\mu\) is then computed.

set.seed(seed)

tmp <- replicate(n, weights(Aup, sim_net(Aup, 0.2), 0.2, 0.05))

mu_hat_IS <- mean(tmp)And we obtain the following confidence interval using the empirical variance estimate \(\hat{\sigma}^2\).

## [1] 0.000262 0.000296 0.000330The ratio of estimated variances for the plain Monte Carlo estimate and the importance sampling estimate is

mu_hat * (1 - mu_hat) / var(tmp)## [1] 11.22Thus we need around 11 times more samples if using plain Monte Carlo integration when compared to importance sampling to obtain the same precision. A benchmark will show that the extra computing time for importance sampling is small compared to the reduction of variance. It is therefore worth the coding effort if used repeatedly, but not if it is a one-off computation.

The graph is, in fact, small enough for complete enumeration and thus the computation

of an exact solution. There are \(2^{18} = 262,144\) different networks with

any number of the edges failing. To

systematically walk through all possible combinations of edges failing,

we use the function intToBits that converts an integer to its binary

representation for integers from 0 to 262,143. This is a quick and convenient

way of representing all the different fail and non-fail combinations

for the edges.

ones <- which(Aup == 1)

Atmp <- Aup

p <- 0.05

prob <- numeric(2^18)

for(i in 0:(2^18 - 1)) {

on <- as.numeric(intToBits(i)[1:18])

Atmp[ones] <- on

if (discon(Atmp)) {

s <- sum(on)

prob[i + 1] <- p^(18 - s) * (1 - p)^s

}

}The probability that nodes 1 and 10 are disconnected can then be computed as

the sum of all the probabilities in prob.

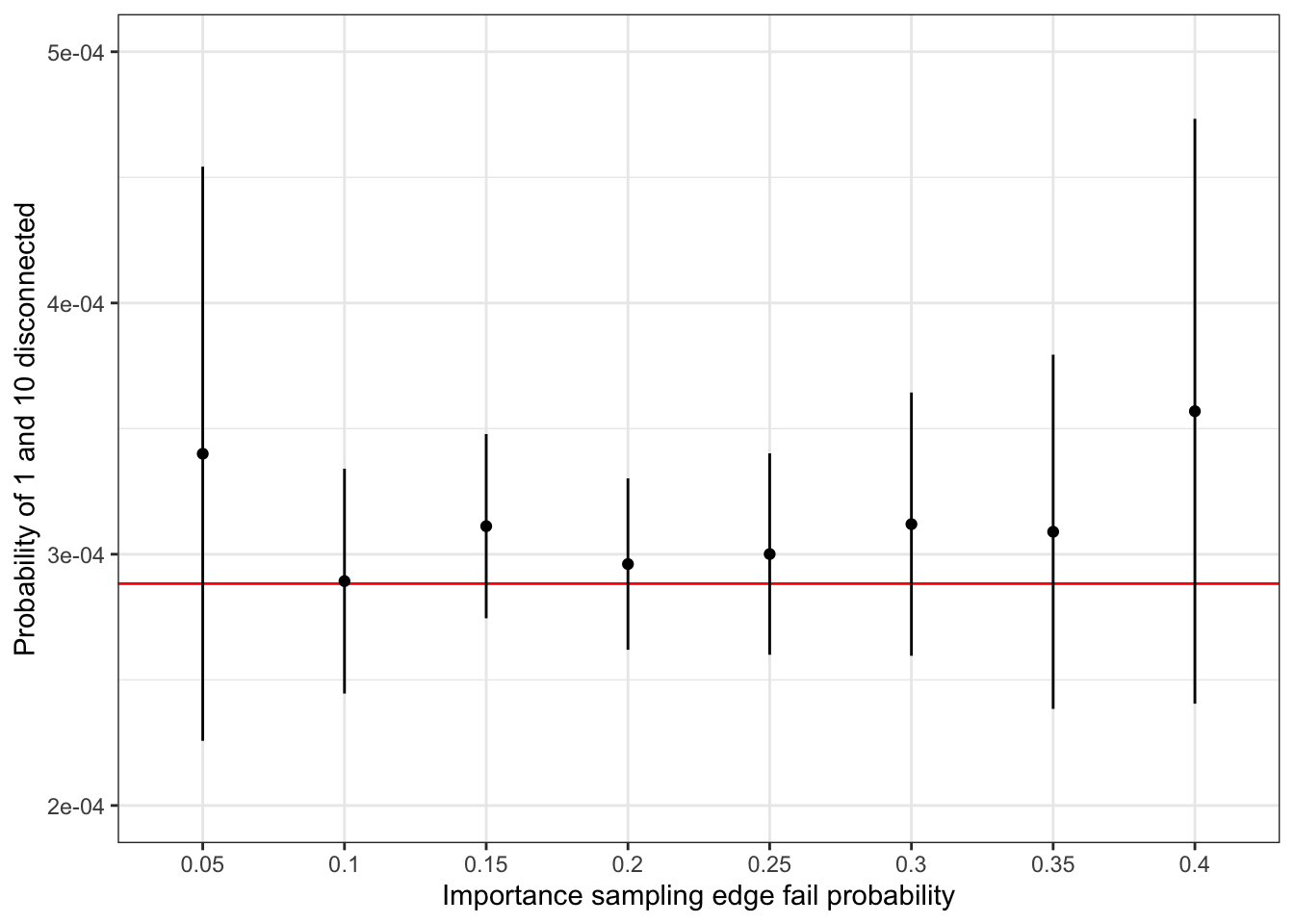

sum(prob)## [1] 0.0002883This number should be compared to the estimates computed above. For a more complete comparison, we have used importance sampling with edge fail probability ranging from 0.1 to 0.4, see Figure 7.6. The results show that a failure probability of 0.2 is close to optimal in terms of giving an importance sampling estimate with minimal variance. For smaller values, the event that 1 and 10 become disconnected is too rare, and for larger values the importance weights become too variable. A choice of 0.2 strikes a good balance.

Figure 7.6: Confidence intervals for importance sampling estimates of network nodes 1 and 10 being disconnected under independent edge failures with probability 0.05. The red line is the true probability computed by complete enumeration.

7.3.1 Object oriented implementations

Implementations of algorithms that handle graphs and simulate graphs, as in the Monte Carlo

computations above, can benefit from using an object oriented approach. To

this end we first implement a so-called constructor, which is a function

that takes the adjacency matrix and the edge failure probability as arguments and

returns a list with class label network.

network <- function(A, p) {

Aup <- A

Aup[lower.tri(Aup)] <- 0

ones <- which((Aup == 1))

structure(

list(

A = A,

Aup = Aup,

ones = ones,

p = p

),

class = "network"

)

}We use the constructor function network() to construct and object of class

network for our specific adjacency matrix.

my_net <- network(A, p = 0.05)

str(my_net) ## List of 4

## $ A : num [1:10, 1:10] 0 1 0 1 1 0 0 0 0 0 ...

## $ Aup : num [1:10, 1:10] 0 0 0 0 0 0 0 0 0 0 ...

## $ ones: int [1:18] 11 22 31 41 44 52 53 63 66 73 ...

## $ p : num 0.05

## - attr(*, "class")= chr "network"

class(my_net)## [1] "network"The network object contains, in addition to A and p, two precomputed components:

the upper triangular part of the adjacency matrix; and the indices in that matrix

containing a 1.

The intention is then to write two methods for the network class. A method sim() that will

simulate a graph where some edges have failed, and a method failure() that will

estimate the probability of node 1 and 10 being disconnected by Monte Carlo

integration. To do so we need to define the two corresponding generic functions.

The method for simulation is then implemented as a function with name

sim.network.

sim.network <- function(x) {

Aup <- x$Aup

Aup[x$ones] <- sample(

c(0, 1),

length(x$ones),

replace = TRUE,

prob = c(x$p, 1 - x$p)

)

Aup

}It is implemented using essentially the same implementation as sim_net()

except that Aup and p are extracted as components from the object x

instead of being arguments, and ones is extracted from x as well instead

of being computed. One could argue that the sim() method should return an object

of class network — that would be natural. However, then we need to call the

constructor with the full adjacency matrix, this will take some time and we do

not want to do that as a default. Thus we simply return the upper triangular

part of the adjacency matrix from sim().

The failure() method implements plain Monte Carlo integration as well

as importance sampling and returns a vector containing the estimate as

well as the 95% confidence interval. This implementation relies on the

already implemented functions discon() and weights().

failure.network <- function(x, n, p0 = NULL) {

if (is.null(p0)) {

# Plain Monte Carlo simulation

tmp <- replicate(n, discon(sim(x)))

mu_hat <- mean(tmp)

se <- sqrt(mu_hat * (1 - mu_hat) / n)

} else {

# Importance sampling

p <- x$p

x$p <- p0

tmp <- replicate(n, weights(x$Aup, sim(x), p0, p))

se <- sd(tmp) / sqrt(n)

mu_hat <- mean(tmp)

}

value <- mu_hat + 1.96 * se * c(-1, 0, 1)

names(value) <- c("low", "estimate", "high")

value

}We test the implementation against the previously computed results.

set.seed(seed) # Resetting seed

failure(my_net, n)## low estimate high

## 0.000226 0.000340 0.000454

set.seed(seed) # Resetting seed

failure(my_net, n, p0 = 0.2)## low estimate high

## 0.000262 0.000296 0.000330We find that these are the same numbers as computed above, thus the object oriented implementation concurs with the non-object oriented on this example.

We benchmark the object oriented implementation to measure if there is

any runtime loss or improvement due to using objects.

One should expect a small computational overhead due to method dispatching,

that is, the procedure that R uses to look up the appropriate sim() method

for an object of class network. On the other hand, sim() does not recompute

ones every time.

bench::mark(

sim_net = sim_net(Aup, 0.05),

sim = sim(my_net),

check = FALSE

)## # A tibble: 2 × 6

## expression min median `itr/sec` mem_alloc `gc/sec`

## <bch:expr> <bch:tm> <bch:tm> <dbl> <bch:byt> <dbl>

## 1 sim_net 5.21µs 6.07µs 152684. 4.39KB 0

## 2 sim 5.49µs 6.23µs 148928. 1.02KB 14.9From the benchmark, the object oriented solution using sim() appears to be

a bit slower than sim_net() despite the latter recomputing ones, and

this can be explained by method dispatching taking of the order of 300 nanoseconds

during these benchmark computations.

Once we have taken an object oriented approach, we can also implement methods

for some standard generic functions, e.g. the print function. As this generic

function already exists, we simply need to implement a method for class network.

print.network <- function(x) {

cat("#vertices: ", nrow(x$A), "\n")

cat("#edges:", sum(x$Aup), "\n")

cat("p = ", x$p, "\n")

}

my_net # Implicitly calls 'print'## #vertices: 10

## #edges: 18

## p = 0.05Our print method now prints out some summary information about the graph instead of just the raw list.

If you want to work more seriously with graphs, it is likely that you want to use an existing R package instead of reimplementing many graph algorithms. One of these packages is igraph, which also illustrates well an object oriented implementation of graph classes in R.

We start by constructing a new graph object from the adjacency matrix.

net <- graph_from_adjacency_matrix(A, mode = "undirected")

class(net)## [1] "igraph"

net # Illustrates the print method for objects of class 'igraph'## IGRAPH 872c4e8 U--- 10 18 --

## + edges from 872c4e8:

## [1] 1-- 2 1-- 4 1-- 5 2-- 3 2-- 6 3-- 6 3-- 7 3-- 8 3--10 4-- 5 4-- 8 5-- 8

## [13] 5-- 9 6-- 7 6--10 7--10 8-- 9 9--10The igraph package supports a vast number of graph computation, manipulation and visualization tools. We will here illustrate how igraph can be used to plot the graph and how we can implement a simulation method for objects of class igraph.

You can use plot(net), which will call the plot method for objects of class igraph.

But before doing so, we will specify a layout of the graph.

# You can generate a layout ...

net_layout <- layout_(net, nicely())

# ... or you can specify one yourself

net_layout <- matrix(

c(-20, 1,

-4, 3,

-4, 1,

-4, -1,

-4, -3,

4, 3,

4, 1,

4, -1,

4, -3,

20, -1),

ncol = 2, nrow = 10, byrow = TRUE)The layout we have specified makes it easy to recognize the graph.

plot(net, layout = net_layout, asp = 0)

We then use two functions from the igraph package to implement simulation of

a new graph. We need gsize(), which gives the number of edges in the graph,

and we need delete_edges(), which removes edges from the graph. Otherwise

the simulation is still based on sample().

sim.igraph <- function(x, p) {

deledges <- sample(

c(TRUE, FALSE),

gsize(x), # 'gsize()' returns the number of edges

replace = TRUE,

prob = c(p, 1 - p)

)

delete_edges(x, which(deledges))

}Note that this method is also called sim(), yet there is no conflict here with

the method for objects of class network because the generic sim() function

will delegate the call to the correct method based on the objects class label.

If we combine our new sim() method for igraphs with the plot method, we can

plot a simulated graph.

plot(sim(net, 0.25), layout = net_layout, asp = 0)

The implementation using igraph turns out to be a little slower than using

the matrix representation alone as in sim_net().

system.time(replicate(1e5, {sim(net, 0.05); NULL}))## user system elapsed

## 3.063 1.918 5.494One could also implement the function for testing if nodes 1 and 10 are

disconnected using the shortest_paths() function, but this is not faster

than the simple matrix multiplications used in discon() either. However, one

should be careful not to draw any general conclusions from this. Our graph is

admittedly a small toy example, and we implemented our solutions largely

to handle this particular graph.